CNN TSC

A Convolutional Neural Network for time-series classification

This post implements a CNN for time-series classification and benchmarks its performance on three of the UCR time-series datasets.

CNNs are widely used for applications involving images. In this post, I show how they perform on time-series. This project is a follow-up to this implementation of LSTMs on the same data. The LSTMs reached only 60% test-accuracy, whereas the state of the art is 99.8% test-accuracy.

Mechanism

Much of our knowledge and intuition about CNNs on images carries over to time-series. The main difference in the code is the stride argument we pass to the conv-layer: we want the kernel to stride along the time axis, but not along the second dimension that we would have used for images.
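To make the idea of striding only along time concrete, here is a minimal pure-Python sketch. It is not the project's TensorFlow code: the function name `conv1d_valid`, the example series and the kernel are assumptions chosen for illustration. In a 2-D convolution API the same effect is typically obtained by giving the series a dummy second dimension of size 1, so the kernel has nowhere to move except along time.

```python
def conv1d_valid(series, kernel, stride=1):
    """Slide `kernel` along the time axis of `series` (no padding)."""
    width = len(kernel)
    if width > len(series):
        raise ValueError("kernel is longer than the series")
    out = []
    # The kernel only moves along time; there is no second axis to stride over.
    for start in range(0, len(series) - width + 1, stride):
        window = series[start:start + width]
        out.append(sum(w * x for w, x in zip(kernel, window)))
    return out


# Hypothetical toy data: a single triangular bump.
series = [0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0]
slope_kernel = [-1.0, 0.0, 1.0]  # positive on rising parts, negative on falling parts

features = conv1d_valid(series, slope_kernel, stride=1)
strided = conv1d_valid(series, slope_kernel, stride=2)
```

With a stride of 1 the output has `len(series) - len(kernel) + 1` entries; a larger stride skips time steps and shortens the feature map accordingly.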

Batchnorm

This project is also a showcase of batch normalization after the conv-layers. With the help of this post on StackOverflow, the code is equipped with three batch-norm layers.

A small code-snippet for illustration:

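```python
# Moving average that tracks mean and variance for use at test-time
ewma = tf.train.ExponentialMovingAverage(decay=0.99)
# Batchnorm-layer for the first conv-layer, which has num_filt_1 filters
bn_conv1 = ConvolutionalBatchNormalizer(num_filt_1, 0.001, ewma, True)
update_assignments = bn_conv1.get_assigner()
# bn_train switches the layer between train-time and test-time behavior
a_conv1 = bn_conv1.normalize(a_conv1, train=bn_train)
```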

- ewma is the moving average function that monitors the mean and variance for use at test-time

- ConvolutionalBatchNormalizer is the class whose instances are batchnorm-layers. Documentation for this class is included in the project

- train=bn_train is a boolean flag that distinguishes train-time from test-time for the batchnorm-layer
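The interplay between the moving average and the train flag can be sketched in plain Python. This is an illustration under assumptions, not the project's `ConvolutionalBatchNormalizer`: the class `SimpleBatchNorm` is hypothetical, it handles a single channel, it omits the learnable scale and shift, it starts the moving statistics at 0 and 1, and it folds the moving-average update into `normalize` instead of exposing it as a separate update step.

```python
import math


class SimpleBatchNorm:
    """Hypothetical single-channel batchnorm with moving statistics."""

    def __init__(self, decay=0.99, epsilon=0.001):
        self.decay = decay
        self.epsilon = epsilon
        self.moving_mean = 0.0  # assumed starting values
        self.moving_var = 1.0

    def normalize(self, batch, train):
        if train:
            # Train-time: use the statistics of the current batch.
            mean = sum(batch) / len(batch)
            var = sum((x - mean) ** 2 for x in batch) / len(batch)
            # Exponential moving average: keep `decay` of the old value.
            self.moving_mean = self.decay * self.moving_mean + (1 - self.decay) * mean
            self.moving_var = self.decay * self.moving_var + (1 - self.decay) * var
        else:
            # Test-time: use the statistics accumulated during training.
            mean, var = self.moving_mean, self.moving_var
        scale = math.sqrt(var + self.epsilon)
        return [(x - mean) / scale for x in batch]


bn = SimpleBatchNorm(decay=0.5)  # small decay so this toy example settles quickly
for _ in range(20):
    train_out = bn.normalize([2.0, 4.0, 6.0, 8.0], train=True)
test_out = bn.normalize([5.0], train=False)
```

After a few training steps the moving mean and variance approach the batch statistics (5.0 and 5.0 here), so a test-time input of 5.0 is mapped close to zero even though it arrives as a batch of one.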

Performance

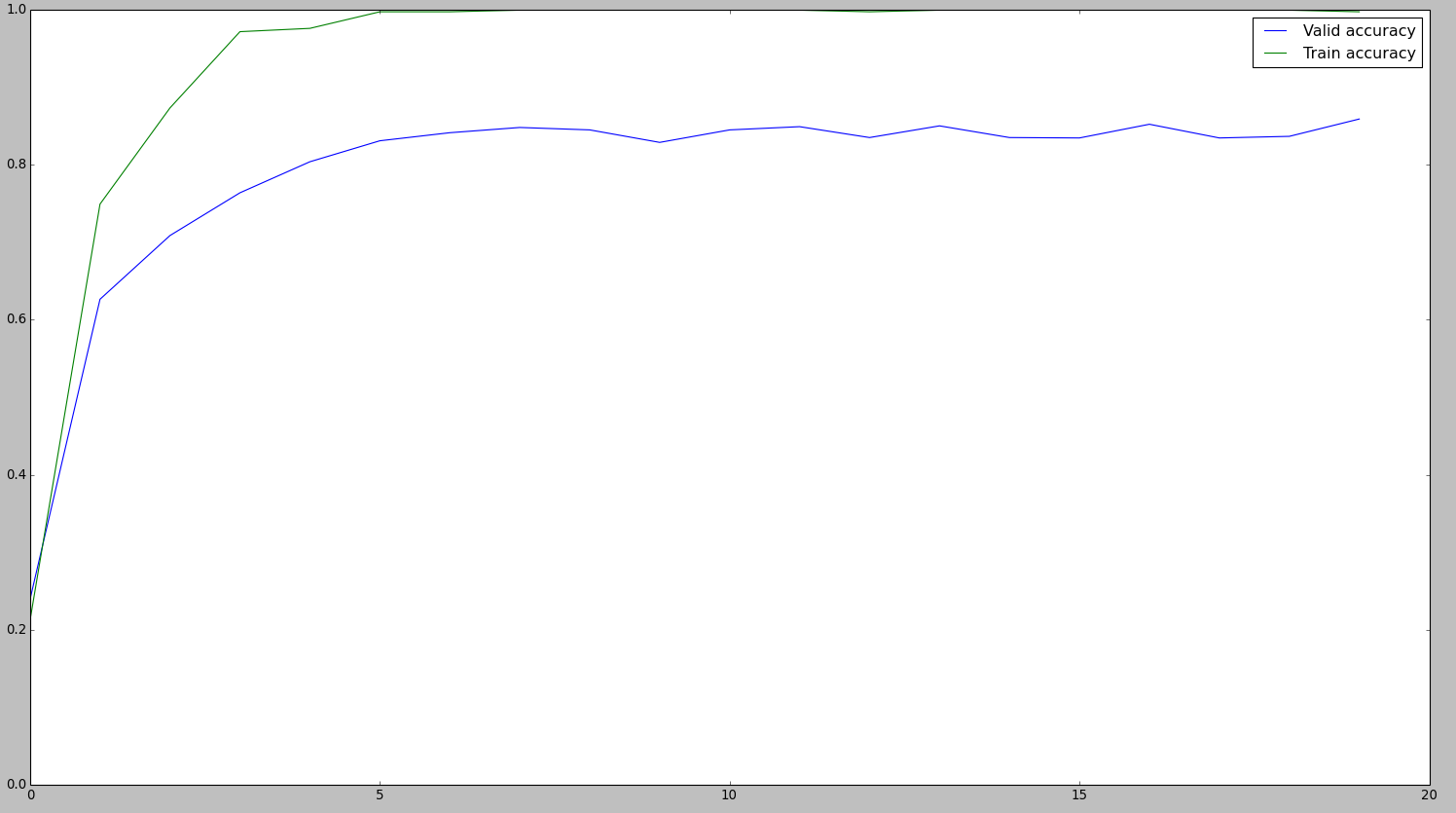

We benchmark the implementation on datasets from the UCR archive. Specifically, we achieve 99.9% and 84.9% test-accuracy on Two_Patterns and ChlorineConcentration, respectively. Both results deserve a closer look.

- The CNN achieves 99.9% test-accuracy on Two_Patterns, beating our own implementation of an LSTM on the same dataset, which reached only 60%. Over the next months, I'll work on another three time-series projects. I hope to come back to this result and explain why the LSTM underperforms and the CNN overperforms on this dataset. Side note: this article reports 99.8% test-accuracy

- The CNN achieves 84.9% test-accuracy on ChlorineConcentration. This result improves on this article, where the best performance was 79.7% and the second best 74%. An improvement of 5.2 percentage points seems significant, and I would like to discuss this result with the researchers. By no means am I trying to attack anyone; I am eager to learn from other people's reasoning and design decisions.

As of 10-April-'16, we also achieve 91.0% test-accuracy on FaceAll, while this article reports 89.5% as the best performance
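For a quick side-by-side view, the following sketch does the arithmetic on the accuracies quoted above. It uses only the numbers from this post; the helper `compare` and the "relative error reduction" measure are my own additions for illustration, and the calculation says nothing about statistical significance.

```python
# (dataset, CNN test-accuracy %, reference test-accuracy %) as quoted in this post
results = [
    ("Two_Patterns", 99.9, 99.8),
    ("ChlorineConcentration", 84.9, 79.7),
    ("FaceAll", 91.0, 89.5),
]


def compare(cnn_acc, ref_acc):
    """Return (gain in percentage points, relative error reduction in %)."""
    gain = cnn_acc - ref_acc
    ref_error = 100.0 - ref_acc  # test error of the reference result
    return round(gain, 1), round(100.0 * gain / ref_error, 1)


summary = {name: compare(cnn, ref) for name, cnn, ref in results}
for name, (gain, rel) in summary.items():
    print(f"{name}: +{gain} points, {rel}% fewer test errors")
```

Expressing the gain relative to the reference error makes the datasets easier to compare: a small gain in percentage points can still remove a large share of the remaining errors when the reference accuracy is already high.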

Results

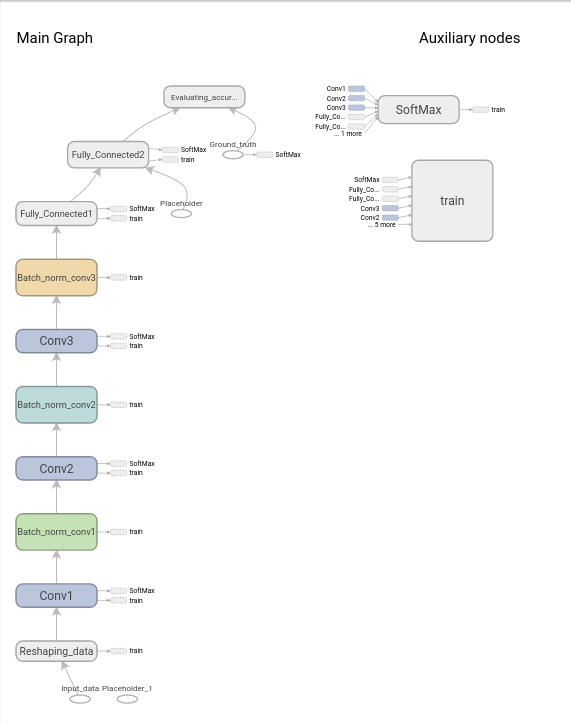

The graph of the CNN:

The evolution of accuracies based on a run with ChlorineConcentration

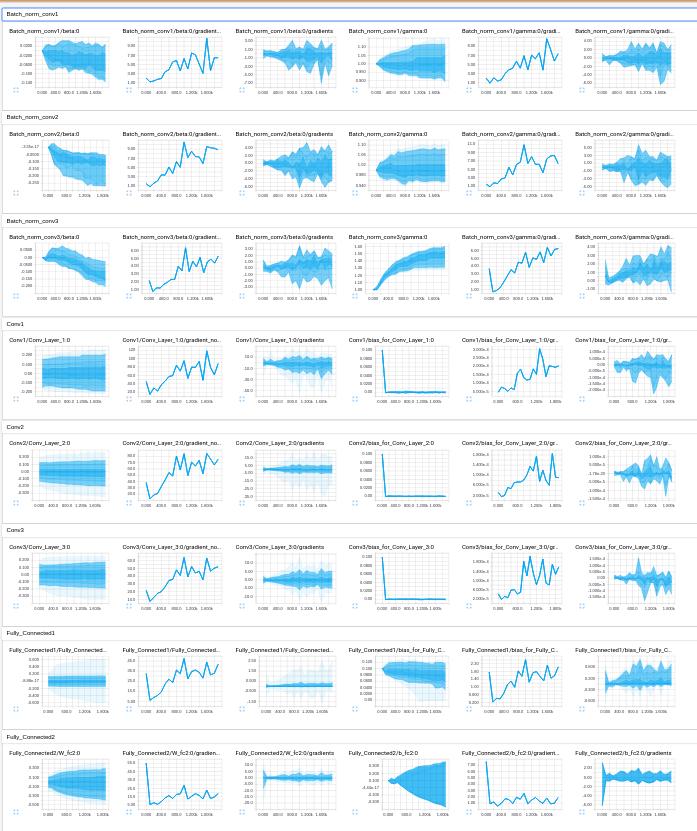

Showcase of TensorBoard

As always, I welcome any comments and questions. Reach me at romijndersrob@gmail.com